Dissection of a Mid-term Exam

It’s mid-term exam season at William & Mary. On Friday, October 1st, 170 students in my Earth’s Environmental Systems course sat for their first exam. This is an introductory class that enrolls a diverse group of students. Many are there to fulfill a General Education Requirement in the physical sciences, many are freshmen and women exploring something new, and others are interested in pursuing a major in environmental studies or geology.

We’d been together for more than a month; students had done in-class activity after in-class activity, taken quizzes, and completed their first problem set. But the first exam is a different beast. My exam contained a delightful mixture of goodies: terms to be defined, images of the Earth to be analyzed, units to be converted, graphs to be graphed, and a smattering of short and long response questions. The time between 9:00 and 9:50 a.m. passed quickly, and harried students turned in their exams as time ran out.

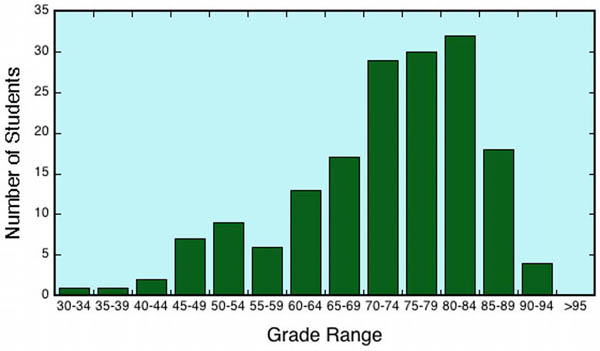

It took nearly a week for me to grade the exams, and I returned them on the day before Fall Break. The mean score was 72%. Four students scored 90% or better, and the high score was 94%. Nearly two-thirds of the class scored in the 70s and 80s, but 15% of the class earned less than 60%. What to make of these numbers and percentages? I was disappointed with the overall performance of the class, and many students were disappointed with their own performance.

I don’t explicitly assign a letter grade to the numeric grade. On a rigid 10-point grade scale there would be just 4 A’s and 27 F’s for the class. Ouch! From my perspective, anybody who earned 85% or better did excellent work, and those who trolled the depths below 60% did poorly. I’ve taught this course for over a decade, and the class average and score distribution for this exam were discernibly lower than in past years. Did I write a longer and harder exam? I hope not. Although my exam questions change from year to year, the exams are invariably the same length and follow a similar style.

A closer look at the exam offers insights that may be useful later this semester. The definitions section (where I ask students to define terms we’ve used) was worth 15 points; the mean score was 9, a mere 60%. Knowing the lexicon is important, and writing accurate definitions requires no critical thinking and should not be difficult. Many students thought they knew the terms but never practiced writing out definitions. The pictures section (where I pose a question based on a projected image of the Earth system) was worth 12 points, and the class averaged 9.6 (80%). We practiced answering these visual questions in nearly every class, and that practice seems to have paid off.

Question #5 dealt with solar incidence angles, day length, and annual solar insolation curves at the Arctic Circle and in Williamsburg. The class average on this question was poor (61%). Although we had covered solar incidence and calculated it for various points on the planet, I cast this question differently than in our previous examples. I don’t want students merely to know ‘facts’; I want them to apply knowledge. Question #5 required students to apply their knowledge in a new way, and most found it difficult. On the longer response questions, students chose two of three, and the class average on these questions was above 80%, an encouraging result.

For many, this exam was the first they’d taken in college and, as such, was an unknown and perhaps even frightening entity. I like to think there will be some comfort in knowing what to expect next time. Others struggled to complete the exam in the allotted time, and some left questions blank as time ran out. Unfortunately, our class occupies a 50-minute time slot, so time management is critical.

The good news is that the first exam counts for just 10% of the overall class grade, and the second exam and final exam are collectively worth 42%. Historically, students do better and better on exams as the semester progresses. Dissecting one’s own exam is worthwhile; evaluate the weak areas, think about strategies to improve, and, as the saying goes, “practice, practice, practice”.


It’s nice to be able to see where my grade sits in relation to the rest of the class. I’m definitely going to study more for the IDs this time and spend more time on the reading. Thanks Prof.

P.S. I wonder if it’s OK for me to say... unicorn

Yeah, thanks Chuck for the dissection.

P.S. Watch out, Mina... the administration may come after you too.

It’s a wonder that some of your students have made it as far as they have (me included). It’s heartening to me that you are getting some new students. I personally thank you for not sticking to a fixed grading rubric.

Have Fun!

The point breakdown is really helpful!

P.S. Unicorn.

Professor Bailey,

How do the midterm grades compare to the problem set you mentioned? Do the students perform similarly in both venues? I’m also very interested in a follow-up after the next exam: does your advice help the students improve?